A Comprehensive Study of Ordinary Linear Regression in Python

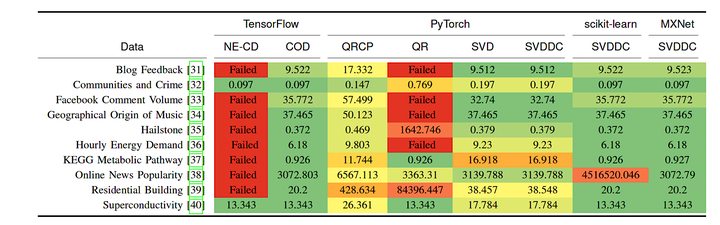

The least squares method is arguably the oldest ML algorithm, yet it remains among the most popular and is ubiquitous across domains. With powerful, inexpensive hardware and the popular open-source language Python, users of every sort now have access to a variety of libraries, all of which provide ordinary least squares. In this work, we ask whether users can count on the different implementations to produce the same results, ceteris paribus. We conduct a comprehensive survey of current Python implementations of the least squares method and provide a comparative analysis of their features and performance (time and space). Additionally, we build datasets that are degenerate with respect to the models in order to examine behavior in this decade of very big data. Our investigation covers the most popular and well-established libraries: TensorFlow, PyTorch, scikit-learn, and MXNet. Unexpectedly, our results show that the differences are significant enough that users must scrutinize their choice of library rather than confine themselves to a single one.
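To make the question concrete, the following is a minimal sketch of how two least squares solvers can agree on well-behaved data and diverge on a degenerate dataset. It is an illustration only and not one of the surveyed implementations: it uses plain Python rather than any of the libraries named above, and the function names, data, learning rate, and step count are all assumptions chosen for the example.

```python
def ols_closed_form(xs, ys):
    """Fit y = a + b*x with the textbook closed-form expressions."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    if sxx == 0.0:
        # The slope is not identifiable when x never varies.
        raise ValueError("degenerate design: x has zero variance")
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def ols_gradient_descent(xs, ys, lr=0.01, steps=20000):
    """Fit y = a + b*x by gradient descent on the mean squared error,
    starting from (0, 0). lr and steps are illustrative choices."""
    n = len(xs)
    a = b = 0.0
    for _ in range(steps):
        residuals = [a + b * x - y for x, y in zip(xs, ys)]
        grad_a = 2.0 * sum(residuals) / n
        grad_b = 2.0 * sum(r * x for r, x in zip(residuals, xs)) / n
        a -= lr * grad_a
        b -= lr * grad_b
    return a, b


# Well-conditioned data generated from y = 1 + 2x: both solvers should agree.
xs_ok, ys_ok = [0.0, 1.0, 2.0, 3.0, 4.0], [1.0, 3.0, 5.0, 7.0, 9.0]
exact_ok = ols_closed_form(xs_ok, ys_ok)
iterative_ok = ols_gradient_descent(xs_ok, ys_ok)

# Degenerate data: x is constant, so infinitely many (a, b) fit equally well.
xs_bad, ys_bad = [2.0, 2.0, 2.0, 2.0], [1.0, 2.0, 3.0, 4.0]
try:
    exact_bad = ols_closed_form(xs_bad, ys_bad)
except ValueError:
    exact_bad = None  # this solver refuses to answer
# This solver silently returns one of the many equally good fits.
iterative_bad = ols_gradient_descent(xs_bad, ys_bad)

print("well-conditioned:", exact_ok, iterative_ok)
print("degenerate:", exact_bad, iterative_bad)
```

On the degenerate input, one solver refuses to answer while the other returns a particular fit determined by its starting point. A user who sees only one of the two would draw different conclusions about the same data.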